Xiao Liu (Leo)

- Mountain View, California

- ThinkBeyondPossible


- @its_hey_leo

- @liuxiao1468

- My Scholar Profile

- My LinkedIn Profile

I am currently a Senior Research Scientist in the Robot Intelligence Lab at Samsung Research America, where we pursue a range of robotics research directions built on foundation models. My research focuses on robot learning, representation learning, and long-horizon task reasoning. My work has been presented at conferences such as CoRL, ICRA, and IROS. I earned my Ph.D. in Computer Science from the School of Computing and Augmented Intelligence at Arizona State University, supervised by Prof. Heni Ben Amor, and completed my Master’s at Case Western Reserve University under the guidance of Prof. Kiju Lee. Please feel free to review my curriculum vitae for further details.

Work Experience

Senior Researcher, Samsung Research America

- Conducting research in the Robot Intelligence Lab, led by Prof. Kris Hauser (more details to come)

Postdoctoral Scientist, Honda Research Institute

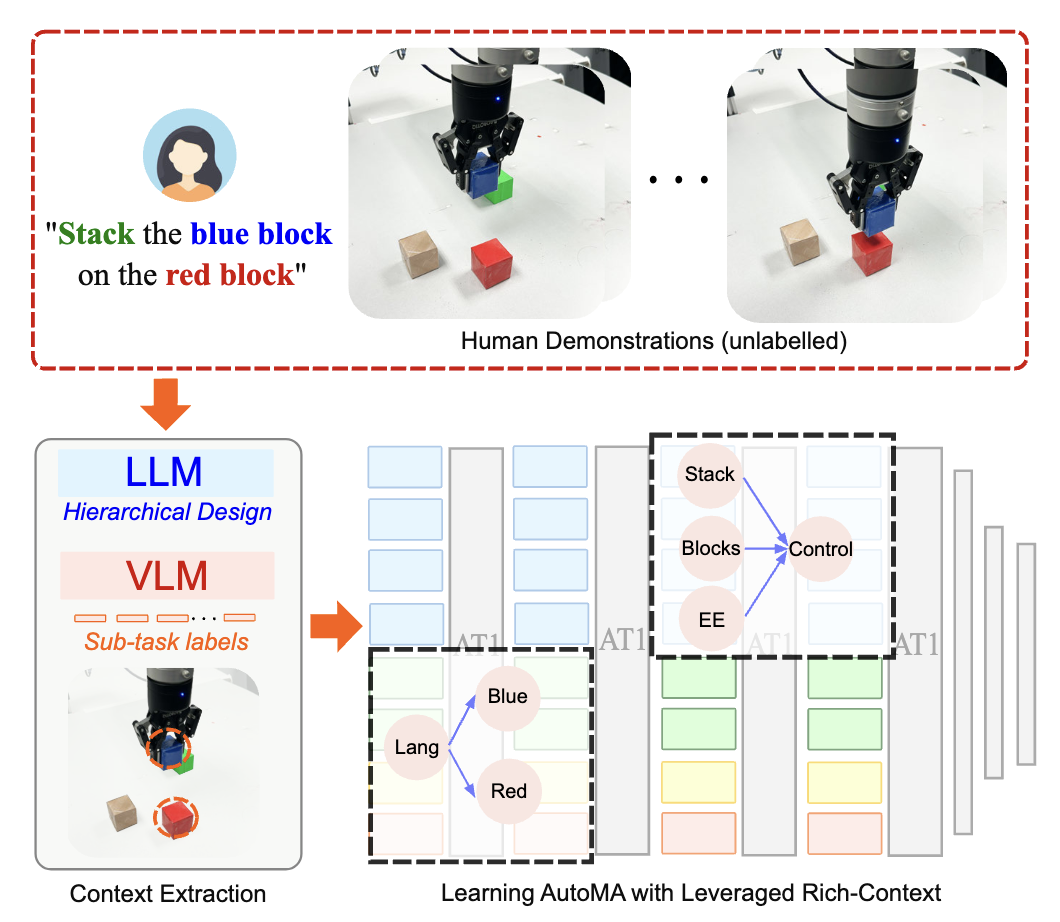

- System I & II: Created an intelligent context-reasoning agent using a large vision model and an LLM, which provides context to a long-horizon dexterous action policy.

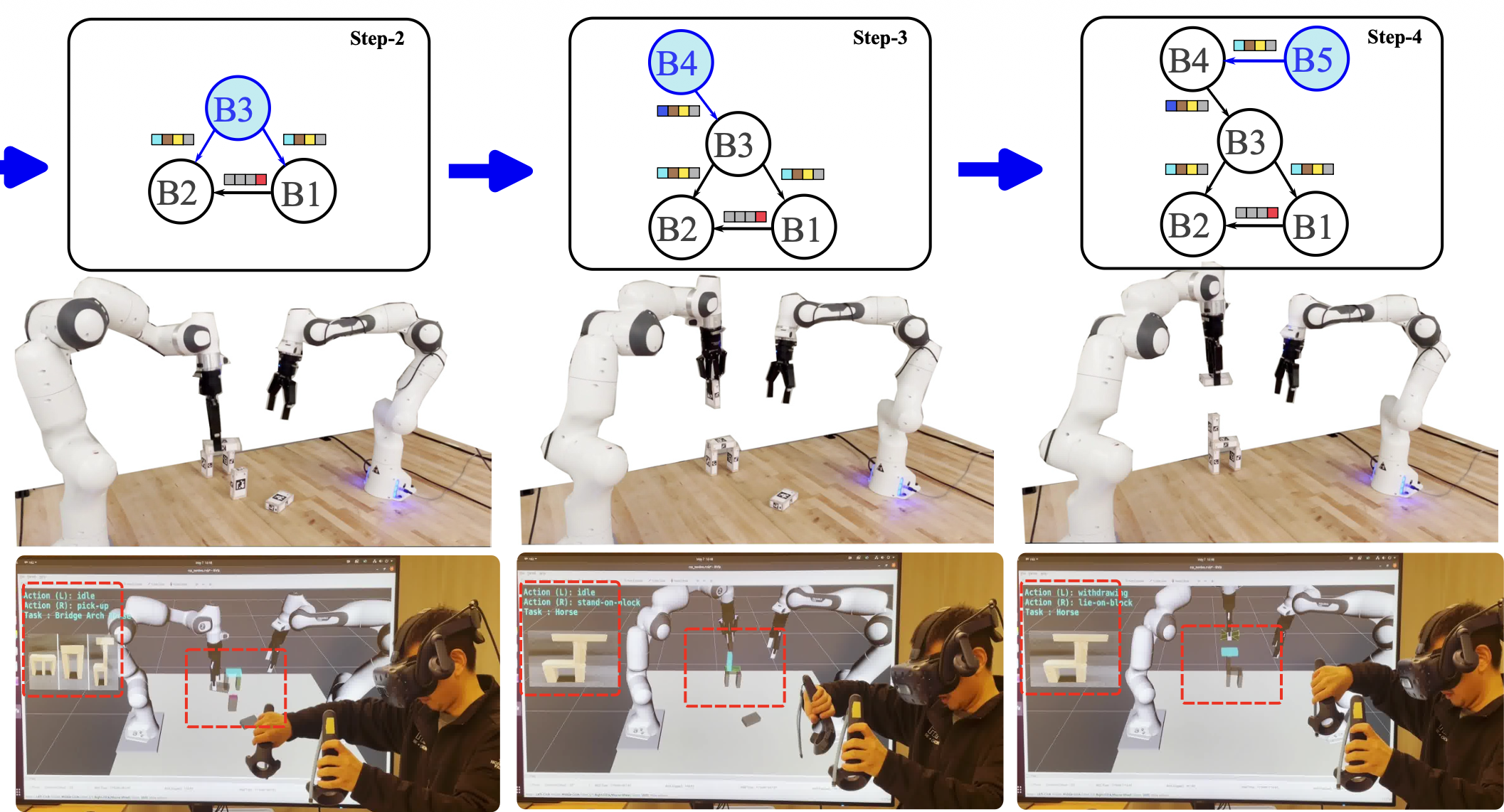

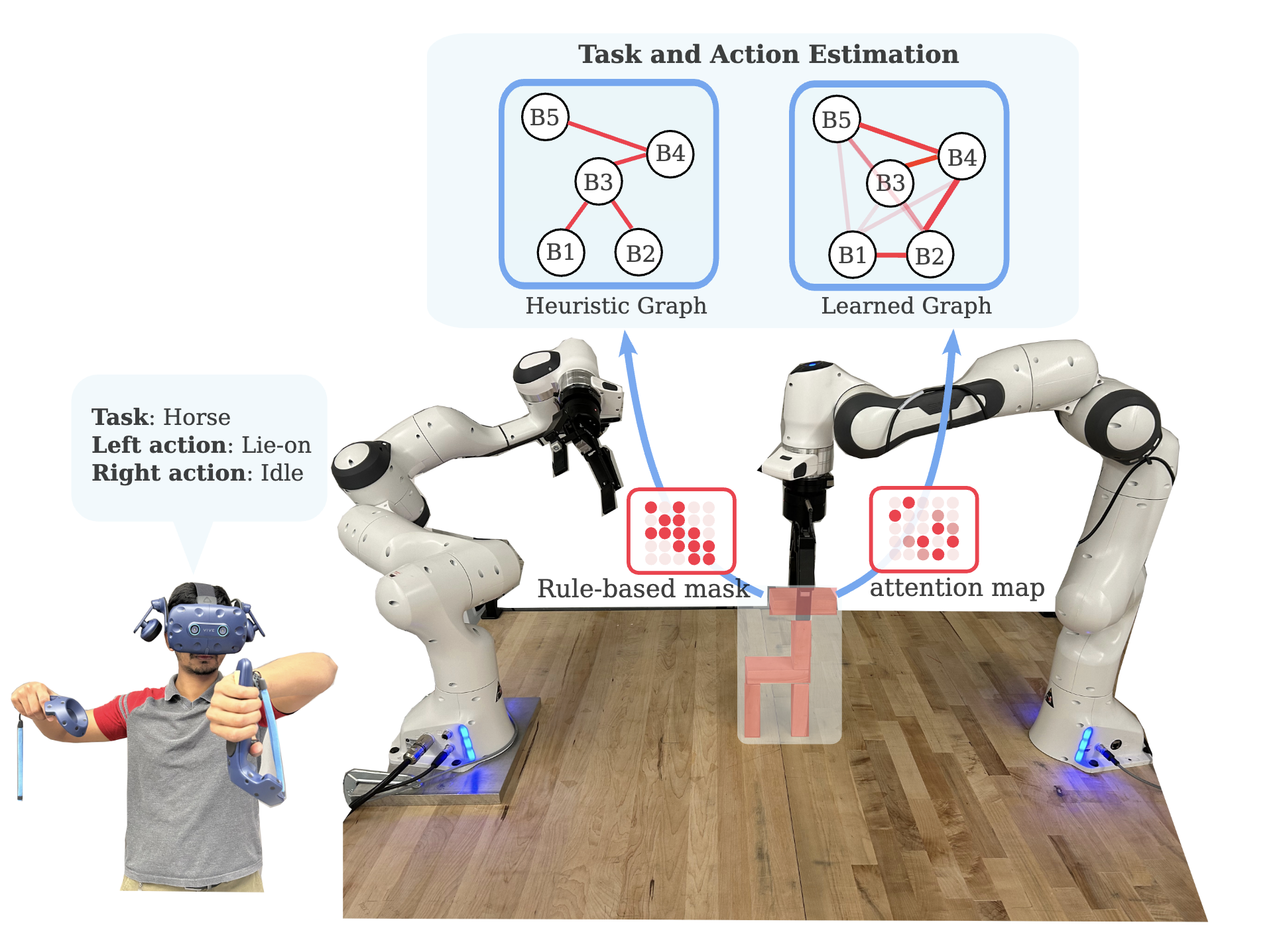

- Developed a scene graph‑based assistant generation framework for a teleoperated avatar robot. This framework facilitates task understanding, encodes spatial relationships during rearrangement tasks, and constructs planning‑capable graphs using graph edit distance.

Research Associate, Interactive Robotics Lab

Research topics: Robot Learning via Deep State-Space Modeling

- Embodied AI: Proposed Diff-Control, an action diffusion policy that adapts ControlNet from image generation to robot action generation.

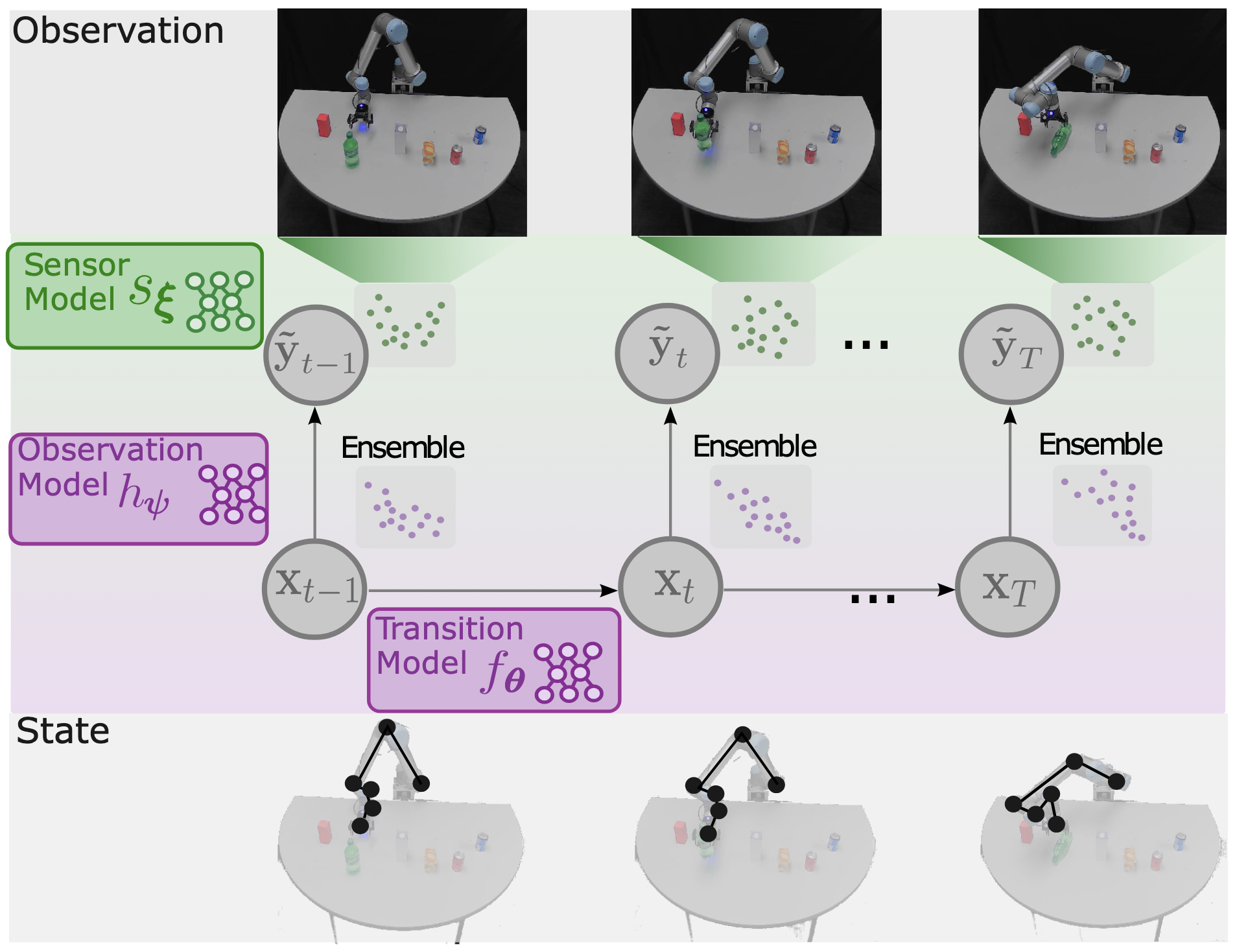

- Created a multimodal learning framework (α-MDF) that combines an attention mechanism with differentiable filtering to perform state estimation in latent space across multiple modalities.

- Developed a differentiable Ensemble Kalman Filter framework incorporating algorithmic priors for robot imitation learning.

Research Engineer (part-time), RadiusAI

Worked on multiple research projects spanning computer vision, differentiable filtering, and optimization.

- Developed and refined multi-object tracking (MOT) algorithms based on Bayes filters for video analytics on indoor and outdoor cameras.

- Developed monocular depth prediction models using advanced architectures, including Vision Transformers (ViT) and multi-scale local planar guidance (LPG) blocks.

- Developed a multi-objective optimization technique based on the Frank-Wolfe algorithm for training across multiple datasets.

Research Assistant, CWRU

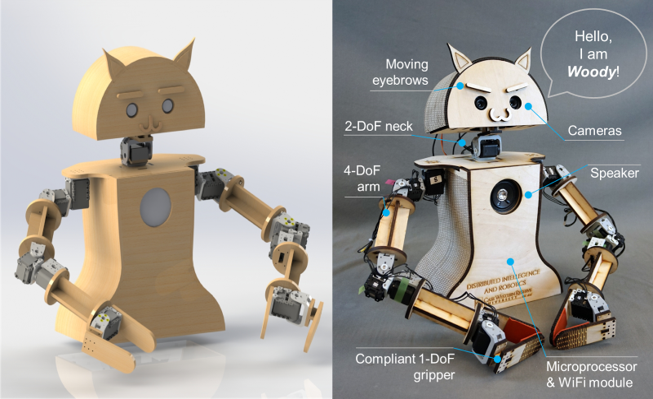

Team leader of the social robot project “Woody and Philos”; collaborating research assistant on the “e-Cube” project for human cognitive skill assessment.

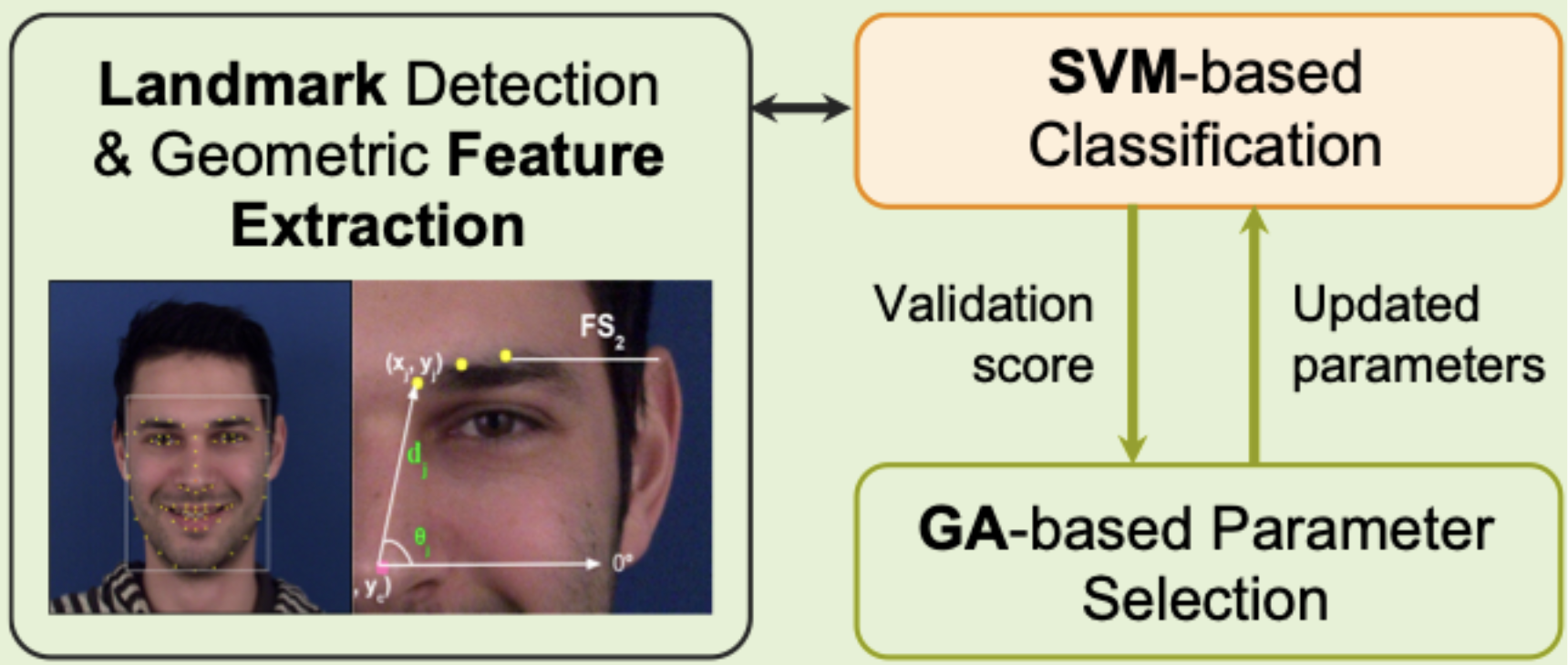

- Human facial emotion expression recognition for human-robot interaction (Case Western Daily)

- Human-centered biomedical device: “e-Cube” for cognitive skill assessment

- Social robots: “Philos” and “Woody” (ideastream)

Publications, Presentations, …

Xiao Liu, Prakash Baskaran, Simon Manschitz, Wei Ma, Dirk Ruiken, Soshi Iba

IEEE International Conference on Robotics and Automation (ICRA), 2026

Paper

Prakash Baskaran, Xiao Liu, Songpo Li, Soshi Iba

IEEE International Conference on Intelligent Robots and Systems (IROS), 2025

Paper

Yifan Zhou, Xiao Liu, Quan Vuong, Heni Ben Amor

IEEE International Conference on Robotics and Automation (ICRA), submitted, 2025

Project Page / Paper

Xiao Liu, Yifan Zhou, Fabian Weigend, Shuhei Ikemoto, Heni Ben Amor

IEEE International Conference on Intelligent Robots and Systems (IROS), 2024

Project Page / Paper

Xiao Liu, Fabian Weigend, Yifan Zhou, Heni Ben Amor

ICRA 2024 Workshop: Robot Learning Going Probabilistic, 2024

Project Page / Paper

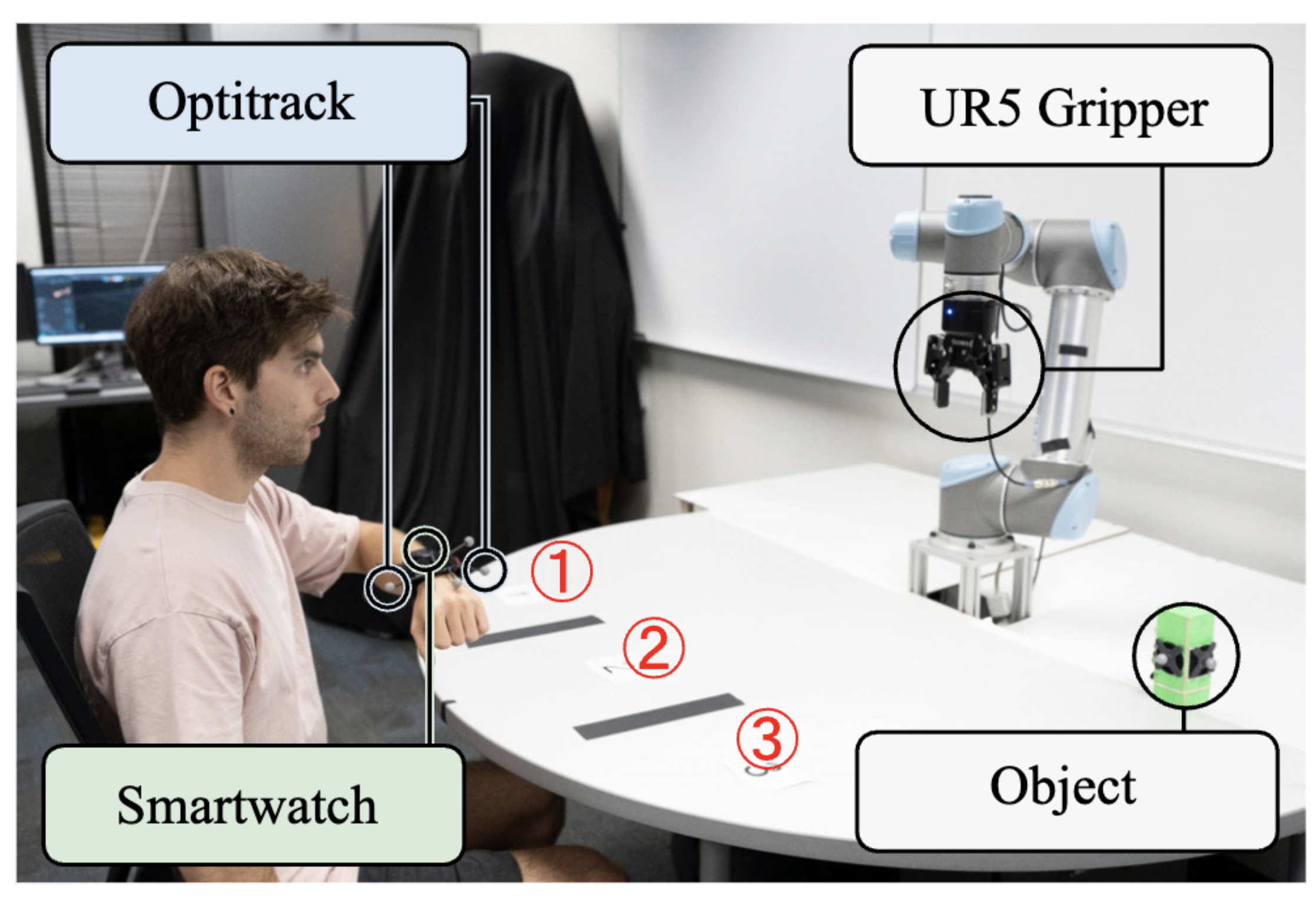

Fabian Weigend, Xiao Liu, Shubham Sonawani, Heni Ben Amor

IEEE International Conference on Robotics and Automation (ICRA), 2024

Project Page / Paper / Video

Xiao Liu, Yifan Zhou, Shuhei Ikemoto, Heni Ben Amor

CoRL 2023 Workshop on Learning for Soft Robots, 2023

Project Page / Paper

Xiao Liu, Yifan Zhou, Shuhei Ikemoto, Heni Ben Amor

7th Conference on Robot Learning (CoRL), 2023

Project Page / Paper / Video

Fabian Weigend, Xiao Liu, Heni Ben Amor

IEEE IROS Workshop DiffPropRob, 2023

Project Page / Paper

Xiao Liu, Geoffrey Clark, Joseph Campbell, Yifan Zhou, Heni Ben Amor

IEEE International Conference on Intelligent Robots and Systems (IROS), 2023

Project Page / Paper

Xiao Liu, Shuhei Ikemoto, Yuhei Yoshimitsu, Heni Ben Amor

IEEE International Conference on Intelligent Robots and Systems (IROS), 2023

Project Page / Paper

Xiao Liu, Xiangyi Cheng, Kiju Lee

IEEE Sensors Journal, vol. 21, no. 10, pp. 11532–11542, 2021

Code / Paper

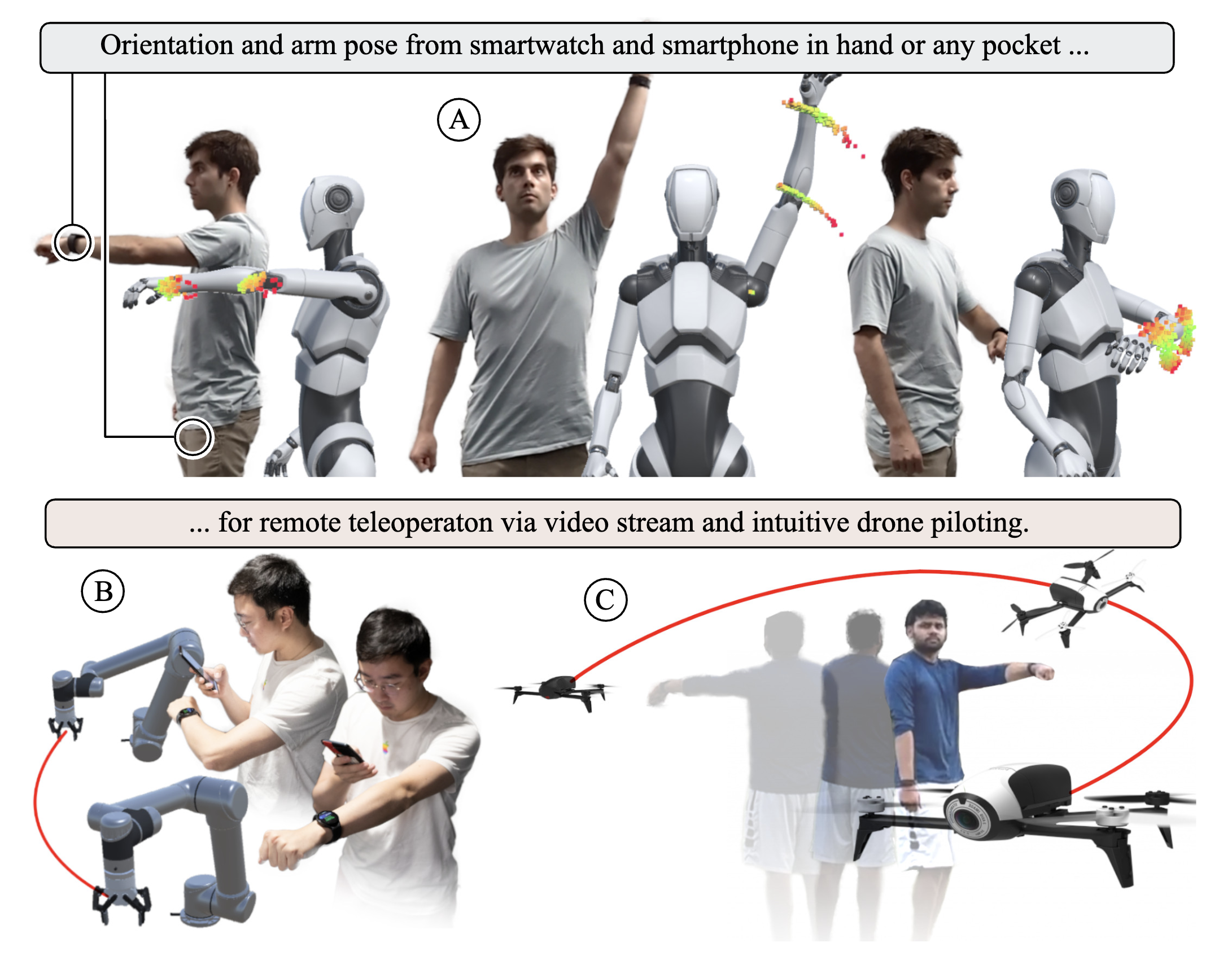

Daniel Hayosh, Xiao Liu, Kiju Lee

IEEE 17th International Conference on Ubiquitous Robots (UR), pp. 247–252, 2020

Project Page / Paper

Xiao Liu, Kiju Lee

IEEE Games, Entertainment, Media Conference, pp. 1–9, 2018

Paper

Xiao Liu, Xiangyi Cheng, Kiju Lee. “e-Cube: Vision-based Interactive Block Games for Assessing Cognitive Skills: Design and Preliminary Evaluation,” CWRU ShowCASE.

Xiao Liu, Daniel Hayosh, Kiju Lee. “Woody: A New Prototype of Social Robotic Platform Integrated with Facial Emotion Recognition for Real-time Application,” CWRU ShowCASE.